What’s SDS (Software-Defined Storage) – Part 2 (Ceph)

In the previous post, I reviewed the concept of Software-Defined Storage and its benefits. In this part, we review one of the open-source solutions for software-defined storage. Because reducing cost is one of the most important goals when migrating from other storage solutions to software-defined storage, using an open-source solution will help you achieve that.

What’s Ceph?

Ceph is a distributed object store and file system designed to provide excellent performance, reliability, and scalability as an SDS (Software-Defined Storage) solution. The name “Ceph” is an abbreviation of “cephalopod”, a class of molluscs that includes the octopus.

Introduction to Ceph

Whether you want to provide Ceph Object Storage and/or Ceph Block Device services to Cloud Platforms, deploy a Ceph Filesystem or use Ceph for another purpose, all Ceph Storage Cluster deployments begin with setting up each Ceph Node, your network, and the Ceph Storage Cluster. A Ceph Storage Cluster requires at least one Ceph Monitor, Ceph Manager, and Ceph OSD (Object Storage Daemon). The Ceph Metadata Server is also required when running Ceph Filesystem clients.

- Monitors: A Ceph Monitor (ceph-mon) maintains maps of the cluster state, including the monitor map, manager map, the OSD map, and the CRUSH map. These maps are critical cluster state required for Ceph daemons to coordinate with each other. Monitors are also responsible for managing authentication between daemons and clients. At least three monitors are normally required for redundancy and high availability.

- Managers: A Ceph Manager daemon (ceph-mgr) is responsible for keeping track of runtime metrics and the current state of the Ceph cluster, including storage utilization, current performance metrics, and system load. The Ceph Manager daemons also host Python-based plugins to manage and expose Ceph cluster information, including a web-based Ceph Manager Dashboard and REST API. At least two managers are normally required for high availability.

- Ceph OSDs: A Ceph OSD (object storage daemon, ceph-osd) stores data, handles data replication, recovery, rebalancing, and provides some monitoring information to Ceph Monitors and Managers by checking other Ceph OSD Daemons for a heartbeat. At least 3 Ceph OSDs are normally required for redundancy and high availability.

- MDSs: A Ceph Metadata Server (MDS, ceph-mds) stores metadata on behalf of the Ceph Filesystem (i.e., Ceph Block Devices and Ceph Object Storage do not use MDS). Ceph Metadata Servers allow POSIX file system users to execute basic commands (like ls, find, etc.) without placing an enormous burden on the Ceph Storage Cluster.
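The recommendation of at least three monitors follows from how monitors agree on the cluster maps: they need a majority (a quorum) to make progress. The short sketch below only does that arithmetic. The function names are made up for illustration and are not part of any Ceph API.

```python
def quorum_size(monitors: int) -> int:
    """Smallest number of monitors that forms a strict majority."""
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    """How many monitors can be down while a majority is still reachable."""
    return monitors - quorum_size(monitors)


if __name__ == "__main__":
    for count in (1, 2, 3, 5):
        print(
            f"{count} monitor(s): quorum={quorum_size(count)}, "
            f"can lose {tolerated_failures(count)}"
        )
```

With one or two monitors, no failure can be tolerated. Three is the smallest count that survives the loss of a single monitor, and an even count adds no extra tolerance over the odd count below it.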

Ceph stores data as objects within logical storage pools. Using the CRUSH algorithm, Ceph calculates which placement group should contain the object, and further calculates which Ceph OSD Daemon should store the placement group. The CRUSH algorithm enables the Ceph Storage Cluster to scale, rebalance, and recover dynamically.
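The two-step placement described above (object to placement group, placement group to OSDs) can be illustrated with a toy model. This is not the real CRUSH algorithm: CRUSH walks a weighted hierarchy of hosts, racks, and rules, while the sketch below substitutes plain rendezvous hashing over a flat list of OSD ids. The function names, the use of SHA-256, and the pool and OSD numbers are all assumptions made for the example.

```python
import hashlib


def stable_hash(text: str) -> int:
    """Deterministic 64-bit hash (Python's built-in hash() is salted per run)."""
    return int.from_bytes(hashlib.sha256(text.encode()).digest()[:8], "big")


def object_to_pg(object_name: str, pg_count: int) -> int:
    """Step 1: hash the object name into one of the pool's placement groups."""
    return stable_hash(object_name) % pg_count


def pg_to_osds(pool_id: int, pg: int, osds: list, replicas: int) -> list:
    """Step 2 (stand-in for CRUSH): rank OSDs by a per-PG score, keep the top ones."""
    ranked = sorted(
        osds,
        key=lambda osd: stable_hash(f"{pool_id}.{pg}:{osd}"),
        reverse=True,
    )
    return ranked[:replicas]


if __name__ == "__main__":
    osds = [0, 1, 2, 3, 4, 5]  # hypothetical OSD ids
    pg = object_to_pg("my-object", pg_count=128)
    print("placement group:", pg)
    print("acting OSDs:", pg_to_osds(pool_id=1, pg=pg, osds=osds, replicas=3))
```

The point of the sketch is that any client can compute the location of an object from its name and the cluster map alone, without asking a central lookup table. In this toy version, removing an OSD only changes the placement groups that had that OSD in their set, which is a rough analogue of how a cluster rebalances after a failure.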

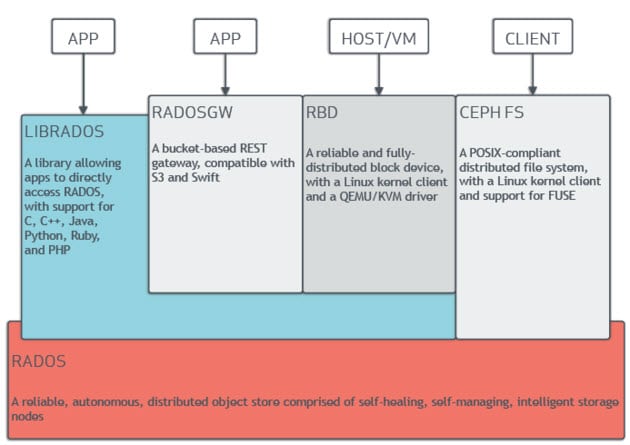

The power of Ceph can transform your organization’s IT infrastructure and your ability to manage vast amounts of data. If your organization runs applications with different storage interface needs, Ceph is for you! Ceph’s foundation is the Reliable Autonomic Distributed Object Store (RADOS), which provides your applications with object, block, and file system storage in a single unified storage cluster—making Ceph flexible, highly reliable and easy for you to manage.

Ceph’s RADOS provides you with extraordinary data storage scalability: thousands of client hosts or KVM virtual machines accessing petabytes to exabytes of data. Each one of your applications can use the object, block or file system interfaces to the same RADOS cluster simultaneously, which means your Ceph storage system serves as a flexible foundation for all of your data storage needs. You can use Ceph for free, and deploy it on economical commodity hardware. Ceph is a better way to store data.

Ceph’s CRUSH algorithm liberates storage clusters from the scalability and performance limitations imposed by centralized data table mapping. It replicates and rebalances data within the cluster dynamically, eliminating this tedious task for administrators while delivering high performance and scalability that is not bounded by a central lookup table.

Ceph uniquely delivers object, block, and file storage in one unified system.

Ceph Object Storage

Ceph’s software libraries provide client applications with direct access to the RADOS object-based storage system, and also provide a foundation for some of Ceph’s advanced features, including RADOS Block Device (RBD), RADOS Gateway (RGW), and the Ceph File System (CephFS).

Ceph Object Gateway is an object storage interface built on top of librados to provide applications with a RESTful gateway to Ceph Storage Clusters. Ceph Object Storage supports two interfaces:

- S3-compatible: Provides object storage functionality with an interface that is compatible with a large subset of the Amazon S3 RESTful API.

- Swift-compatible: Provides object storage functionality with an interface that is compatible with a large subset of the OpenStack Swift API.

Benefits are as follows:

- RESTful Interface

- S3- and Swift-compliant APIs

- S3-style subdomains

- Unified S3/Swift namespace

- User management

- Usage tracking

- Striped objects

- Cloud solution integration

- Multi-site deployment

- Multi-site replication
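Because the gateway speaks an S3-compatible API, a generic S3 client can usually be pointed at it by overriding the endpoint. The sketch below uses the third-party boto3 library. The endpoint URL, credentials, and bucket and key names are placeholders, and the helper function names are made up for this example. It assumes a gateway user with valid S3 keys already exists.

```python
def rgw_client_kwargs(endpoint_url: str, access_key: str, secret_key: str) -> dict:
    """Keyword arguments that point a boto3 S3 client at a Ceph Object Gateway."""
    return {
        "endpoint_url": endpoint_url,  # the gateway's address, not an AWS one
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
    }


def make_rgw_client(endpoint_url: str, access_key: str, secret_key: str):
    """Create the client; boto3 is imported here so the rest runs without it."""
    import boto3  # third-party: pip install boto3

    return boto3.client("s3", **rgw_client_kwargs(endpoint_url, access_key, secret_key))


def upload_and_list(client, bucket: str, key: str, body: bytes) -> list:
    """Create a bucket, store one object, and return the keys in the bucket."""
    client.create_bucket(Bucket=bucket)
    client.put_object(Bucket=bucket, Key=key, Body=body)
    response = client.list_objects_v2(Bucket=bucket)
    return [item["Key"] for item in response.get("Contents", [])]


if __name__ == "__main__":
    # Placeholder endpoint and credentials; replace with your gateway's values.
    s3 = make_rgw_client("http://rgw.example.com:7480", "ACCESS_KEY", "SECRET_KEY")
    print(upload_and_list(s3, "demo-bucket", "hello.txt", b"hello ceph"))
```

The same application code should work against the gateway or against another S3-compatible service by changing only the endpoint and credentials, which is the practical benefit of the compatible interface.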

Ceph Block Device

Ceph’s object storage system isn’t limited to native binding or RESTful APIs. You can mount Ceph as a thinly provisioned block device! When you write data to Ceph using a block device, Ceph automatically stripes and replicates the data across the cluster. Ceph’s RADOS Block Device (RBD) also integrates with Kernel-based Virtual Machines (KVMs), bringing Ceph’s virtually unlimited storage to KVMs running on your Ceph clients.

Ceph RBD interfaces with the same Ceph object storage system that provides the librados interface and the CephFS file system, and it stores block device images as objects. Since RBD is built on top of librados, RBD inherits librados capabilities, including read-only snapshots and revert to snapshot. By striping images across the cluster, Ceph improves read access performance for large block device images. Benefits are as follows:

- Thin-provisioned

- Images up to 16 exabytes

- Configurable striping

- In-memory caching

- Snapshots

- Copy-on-write cloning

- Kernel driver support

- KVM/libvirt support

- Back-end for cloud solutions

- Incremental backup

- Disaster recovery (multisite asynchronous replication)
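To make "stores block device images as objects" more concrete, here is a small sketch of how a byte offset in an image could map to a backing object. It assumes a 4 MiB object size and the simplest layout, where objects are filled one after another. The image id and the object name pattern are illustrative only, and the function names are made up for this example.

```python
OBJECT_SIZE = 4 * 1024 * 1024  # assumed object size: 4 MiB


def locate(offset: int, image_id: str = "abc123", object_size: int = OBJECT_SIZE):
    """Return (object name, offset inside that object) for a byte offset."""
    object_no, object_off = divmod(offset, object_size)
    # Illustrative naming: prefix, image id, object number as 16 hex digits.
    return f"rbd_data.{image_id}.{object_no:016x}", object_off


def objects_for_write(offset: int, length: int, object_size: int = OBJECT_SIZE) -> list:
    """Object numbers touched by a write of `length` bytes at `offset`."""
    if length <= 0:
        return []
    first = offset // object_size
    last = (offset + length - 1) // object_size
    return list(range(first, last + 1))


if __name__ == "__main__":
    print(locate(0))
    print(locate(OBJECT_SIZE + 10))
    print(objects_for_write(OBJECT_SIZE - 1, 2))  # write that spans two objects
```

This also gives an intuition for thin provisioning: only the objects that a write actually touches need to exist, so a large image that is mostly unwritten consumes little space in the cluster.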

Ceph FileSystem

Ceph’s object storage system offers a significant feature compared to many object storage systems available today: Ceph provides a traditional file system interface with POSIX semantics. Object storage systems are a significant innovation, but they complement rather than replace traditional file systems. As storage requirements grow for legacy applications, organizations can configure their legacy applications to use the Ceph file system too! This means you can run one storage cluster for object, block and file-based data storage.

Ceph’s file system runs on top of the same object storage system that provides object storage and block device interfaces. The Ceph metadata server cluster provides a service that maps the directories and file names of the file system to objects stored within RADOS clusters. The metadata server cluster can expand or contract, and it can rebalance the file system dynamically to distribute data evenly among cluster hosts. This ensures high performance and prevents heavy loads on specific hosts within the cluster. Benefits are as follows:

- POSIX-compliant semantics

- Separates metadata from data

- Dynamic rebalancing

- Subdirectory snapshots

- Configurable striping

- Kernel driver support

- FUSE support

- NFS/CIFS deployable

- Use with Hadoop (replace HDFS)
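The "configurable striping" item can be sketched as arithmetic. A file layout is described by a stripe unit, a stripe count, and an object size, and a file offset is mapped round-robin across a set of objects. The function below is a simplified model of that mapping, not code taken from Ceph. Its name and parameter names are chosen for this example.

```python
def file_offset_to_object(offset: int, stripe_unit: int, stripe_count: int,
                          object_size: int):
    """Map a file byte offset to (object number, offset inside that object)."""
    if object_size % stripe_unit != 0:
        raise ValueError("object_size must be a multiple of stripe_unit")
    stripes_per_object = object_size // stripe_unit
    block_no = offset // stripe_unit                 # which stripe unit overall
    stripe_no, stripe_pos = divmod(block_no, stripe_count)
    object_set = stripe_no // stripes_per_object     # group of stripe_count objects
    object_no = object_set * stripe_count + stripe_pos
    object_off = (stripe_no % stripes_per_object) * stripe_unit + offset % stripe_unit
    return object_no, object_off


if __name__ == "__main__":
    MiB = 1024 * 1024
    # Hypothetical layout: 1 MiB stripe units spread over 2 objects of 4 MiB each.
    for off in (0, 1 * MiB, 2 * MiB, 8 * MiB + 5):
        print(off, file_offset_to_object(off, 1 * MiB, 2, 4 * MiB))
```

With a stripe count greater than one, consecutive stripe units land on different objects, and therefore usually on different OSDs, so large sequential reads and writes can be served in parallel.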

Ceph Architecture

Ceph is highly reliable, easy to manage, and free. It can transform your company’s IT infrastructure and your ability to manage vast amounts of data, and it scales to thousands of clients accessing petabytes to exabytes of data. A Ceph Node leverages commodity hardware and intelligent daemons, and a Ceph Storage Cluster accommodates large numbers of nodes, which communicate with each other to replicate and redistribute data dynamically.

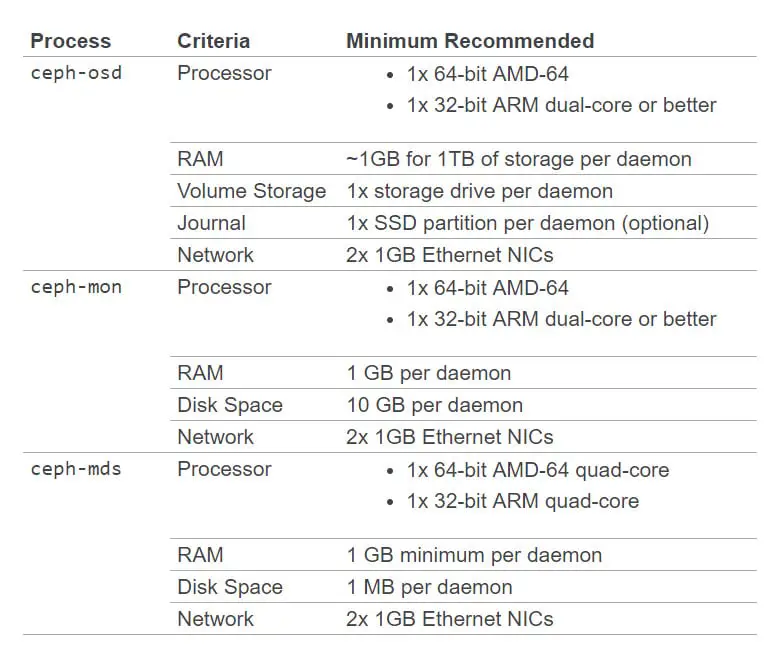

Minimum Hardware Recommendations

Ceph can run on inexpensive commodity hardware. Small production clusters and development clusters can run successfully with modest hardware.

Further Reading

What’s SDS (Software-Defined Storage) – Part 1 (Overview)

External Links

SUSE Enterprise Storage (Ceph)