Kube-Burner: Ignite Your Kubernetes Performance Optimization

Kubernetes runs workloads of every kind, from simple websites to complex AI applications. But like a powerful engine, even the most resilient Kubernetes cluster can fail when put under stress. Enter Kube-burner, a tool that lets you stress-test your Kubernetes setup, tune it, and get the most out of it.

This guide takes a close look at Kube-burner: its features, its benefits, and its real-world uses. Whatever your level of experience with Kubernetes, it should give you the knowledge to push your cluster to its limits, find bottlenecks, and build a more robust and scalable infrastructure.

What is Kube-Burner?

Built with fire in its name and efficiency in its core, Kube-burner is an open-source, Golang-based framework designed to orchestrate performance and scale testing for Kubernetes clusters. It acts as a conductor, meticulously coordinating the creation, deletion, and modification of Kubernetes resources at scale. This intentional “burning” process puts your cluster through its paces, exposing its strengths and weaknesses in a controlled environment.

What can Kube-Burner do?

Kube-burner boasts a multi-faceted arsenal of capabilities that empower you to:

- Stress Test the Control Plane and Runtime: By spawning a multitude of Kubernetes objects like pods, deployments, and services, Kube-burner simulates real-world workloads and identifies potential bottlenecks within the Kubernetes control plane and runtime environment.

- Benchmark Performance: Measure critical metrics like pod creation time, API latency, and scheduling efficiency through various workload types, providing valuable insights into your cluster’s performance characteristics.

- Establish Baselines and Track Progress: It helps you create a baseline performance profile for your cluster. This serves as a reference point for future tests, allowing you to track improvements and regression points over time.

- Compare Different Kubernetes Distributions: Evaluate the performance of different Kubernetes distributions (e.g., vanilla Kubernetes vs. OpenShift) under load, aiding in selecting the optimal solution for your specific needs.

- Set Service Level Objectives (SLOs): Using the insights gleaned from tests, you can define realistic and measurable SLOs, ensuring consistent performance and user experience for your applications.

- Identify Scalability Limits: It helps you discover the upper bound of your cluster’s resource capacity, allowing you to plan for future growth and scaling requirements proactively.
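
The benchmarking, baseline, and SLO ideas in the list above come down to a few lines of arithmetic. The following is a minimal sketch, not Kube-burner's own code. It assumes you have exported per-pod startup latencies (in milliseconds) from two test runs; the function names, the sample numbers, and the 10% regression tolerance are all hypothetical choices made for illustration.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of the data at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def regressed(baseline_ms, current_ms, tolerance=0.10):
    """True when the current value exceeds the baseline by more than the tolerance."""
    return current_ms > baseline_ms * (1 + tolerance)


def meets_slo(samples, pct, limit_ms):
    """True when the chosen percentile stays at or below the SLO limit."""
    return percentile(samples, pct) <= limit_ms


# Hypothetical pod startup latencies (ms) from a baseline run and a later run.
baseline_run = [1200, 1350, 1100, 1500, 1250, 1400, 1300, 1150, 1450, 1600]
current_run = [1300, 1500, 1250, 1900, 1400, 1550, 1450, 1350, 1700, 2100]

baseline_p99 = percentile(baseline_run, 99)
current_p99 = percentile(current_run, 99)
print(f"baseline P99={baseline_p99} ms, current P99={current_p99} ms")
print("regression:", regressed(baseline_p99, current_p99))
print("meets 2000 ms P99 SLO:", meets_slo(current_run, 99, 2000))
```

Keeping the baseline numbers next to each new run makes it easy to tell whether a cluster change helped or hurt, and an SLO derived from measured percentiles is more likely to be realistic than one picked in advance.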

Benefits of using Kube-Burner

Integrating Kube-burner into your Kubernetes workflow offers a multitude of benefits:

- Enhanced Performance: Uncover bottlenecks and optimize your cluster configuration for improved performance and responsiveness.

- Increased Reliability: Proactive testing with Kube-burner helps catch potential issues before they impact production environments, leading to a more reliable and stable Kubernetes deployment.

- Greater Confidence: By understanding the performance limits of your cluster, you gain the confidence to deploy and scale your applications effectively.

- Cost Optimization: Identify resource inefficiencies and optimize resource allocation, potentially leading to cost savings.

- Improved Decision Making: Data-driven insights from Kube-burner empower you to make informed decisions about infrastructure investments and scaling strategies.

Putting Kube-Burner into Action

Putting Kube-burner to work is a straightforward and user-friendly process:

1. Setting Up Kube-burner:

Installation is simple: Kube-burner is distributed as a standalone binary, so you can run it from any machine that has access to your cluster, or run it as a pod inside the cluster. Refer to the official documentation for detailed instructions.

2. Defining Workloads:

Kube-burner lets you define workloads in YAML configuration files. Each configuration specifies the type and number of resources to create and manipulate during the test.
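
To make "type and number of resources" concrete, here is a rough Python sketch of the arithmetic behind a workload definition. It is a simplified model rather than Kube-burner's actual schema: the `Job` and `ObjectSpec` classes and their field names are hypothetical, and the one-request-per-object assumption is a simplification. Check the official documentation for the real configuration keys.

```python
from dataclasses import dataclass, field


@dataclass
class ObjectSpec:
    kind: str      # e.g. "Deployment" or "Service"
    replicas: int  # copies created per iteration


@dataclass
class Job:
    name: str
    iterations: int  # how many times the object set is repeated
    qps: float       # assumed cap on API requests per second
    objects: list = field(default_factory=list)

    def totals(self):
        """Number of objects of each kind the job would create."""
        counts = {}
        for spec in self.objects:
            counts[spec.kind] = counts.get(spec.kind, 0) + spec.replicas * self.iterations
        return counts

    def min_duration_seconds(self):
        """Lower bound on creation time if every object costs one API request."""
        return sum(self.totals().values()) / self.qps


# Hypothetical workload: 100 iterations, each creating 2 Deployments and 1 Service.
job = Job(
    name="density-test",
    iterations=100,
    qps=20,
    objects=[ObjectSpec("Deployment", 2), ObjectSpec("Service", 1)],
)
print(job.totals())
print(f"at least {job.min_duration_seconds():.0f} s of API calls")
```

Estimating the object count up front helps you size a test before pointing it at a shared cluster.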

3. Running the Test:

Once the workload is defined, start the test with the `kube-burner init` command, passing your configuration file with the `-c` flag. This command kicks off the test, and Kube-burner collects the configured measurements and metrics as the test runs.

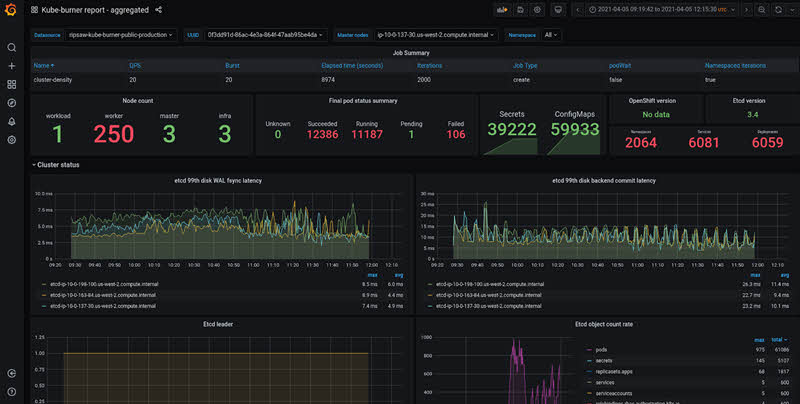

4. Analyzing Results:

Kube-burner collects key performance metrics such as API latency, pod creation time, and resource utilization. You can use these results, typically visualized in a dashboard, to identify bottlenecks and optimize your cluster configuration.

Advanced Use Cases

Kube-burner provides advanced functionalities for experienced users:

- Prometheus Integration: Kube-burner seamlessly integrates with Prometheus, allowing you to collect and analyze detailed performance metrics during the test run.

- Alerting: Configure Kube-burner to trigger alerts if critical thresholds are breached during testing, enabling you to proactively address potential issues.

- Custom Workloads: Kube-burner allows you to develop custom workload definitions tailored to your specific testing needs.
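
As an illustration of the threshold-based checking described in the list above, the sketch below queries Prometheus through its HTTP API (`/api/v1/query`) and flags any series whose value exceeds a limit. It is independent of Kube-burner's own alerting mechanism; the function names, the sample response, and the threshold are placeholders chosen for this example.

```python
import json
import urllib.parse
import urllib.request


def build_query_url(base_url, promql):
    """URL for an instant query against the Prometheus HTTP API."""
    return f"{base_url.rstrip('/')}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def breaches(response, threshold):
    """Return (labels, value) pairs from an instant-vector result that exceed the threshold."""
    if response.get("status") != "success":
        raise RuntimeError(f"query failed: {response.get('error')}")
    found = []
    for series in response["data"]["result"]:
        _timestamp, raw_value = series["value"]  # Prometheus sends the value as a string
        value = float(raw_value)
        if value > threshold:
            found.append((series["metric"], value))
    return found


def check(base_url, promql, threshold):
    """Run the query against a live Prometheus server and return the breaching series."""
    with urllib.request.urlopen(build_query_url(base_url, promql), timeout=10) as resp:
        return breaches(json.load(resp), threshold)


# Offline example using a response shaped like Prometheus's instant-vector output.
sample = {
    "status": "success",
    "data": {
        "resultType": "vector",
        "result": [
            {"metric": {"verb": "GET"}, "value": [1700000000, "0.42"]},
            {"metric": {"verb": "LIST"}, "value": [1700000000, "1.75"]},
        ],
    },
}
print(breaches(sample, threshold=1.0))
```

Against a real cluster you would call `check()` with your Prometheus address and a PromQL expression of your choice, for example a 99th-percentile API latency query, and treat a non-empty result as a failed test.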

Comparison with Similar Tools and Applications

While Kube-burner shines in its specific functionality, several other tools offer similar capabilities for Kubernetes performance testing and benchmarking. Below, we compare it with some prominent alternatives:

| Feature | Kube-Burner | Kubestorm | Locust | Siege |
|---|---|---|---|---|
| Scope | Kubernetes Cluster Performance and Scale Testing | Kubernetes Cluster Performance Testing | Distributed Load Testing Tool | HTTP Load Testing Tool |
| Focus | System-level metrics, control plane, and runtime performance | System-level and application-level metrics | User behavior simulation and load generation | HTTP load generation and performance testing |
| Workload Capabilities | Predefined workloads and custom workload definitions | Predefined workloads and custom workload definitions | Extensive range of predefined user simulations | Limited to HTTP requests |
| Metrics Collection | Integrates with Prometheus for detailed system-level metrics | Collects basic system-level metrics | Collects user behavior-related metrics | Collects basic HTTP request-response metrics |
| Alerting | Supports configuring alerts based on Prometheus expressions | Limited alerting capabilities | Extensive alerting capabilities | Limited alerting capabilities |
| Ease of Use | User-friendly with YAML configurations and easy command-line interface | Relatively user-friendly with YAML configurations and web interface | Requires scripting knowledge | Straightforward command-line interface |
| Open Source | Yes | Yes | Yes | Yes |

Key Points to Consider When Choosing a Tool

- Primary purpose: Identify whether you require system-level testing like Kube-burner or application-level performance testing offered by tools like Locust.

- Required level of control: Choose a tool that offers the level of customization (e.g., custom workloads) you need for your specific testing scenarios.

- Technical expertise: Evaluate the tools based on the level of scripting or configuration knowledge required for operation.

- Integration needs: Consider compatibility with other tools like Prometheus or monitoring systems you might use.

Conclusion

Kube-burner stands as a powerful and versatile tool for orchestrating and analyzing Kubernetes cluster performance. By utilizing its comprehensive features and comparing it with its peers, you can make an informed decision about the best fit for your specific testing needs. Remember, the choice of tool ultimately depends on the unique requirements and goals of your particular Kubernetes environment.
