Optimizing Data Protection: Unleashing the Power of Database Backup Best Practices in Virtualization Platforms

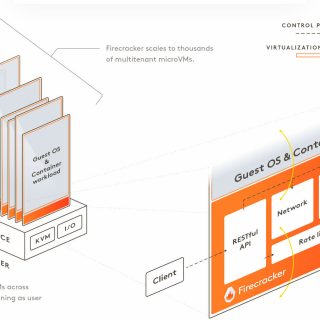

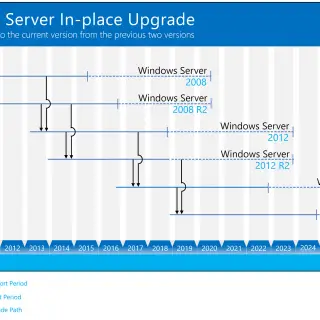

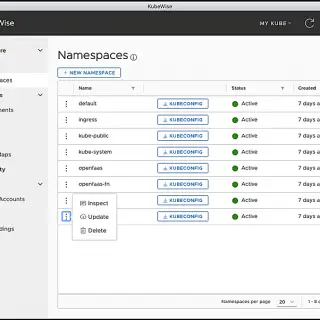

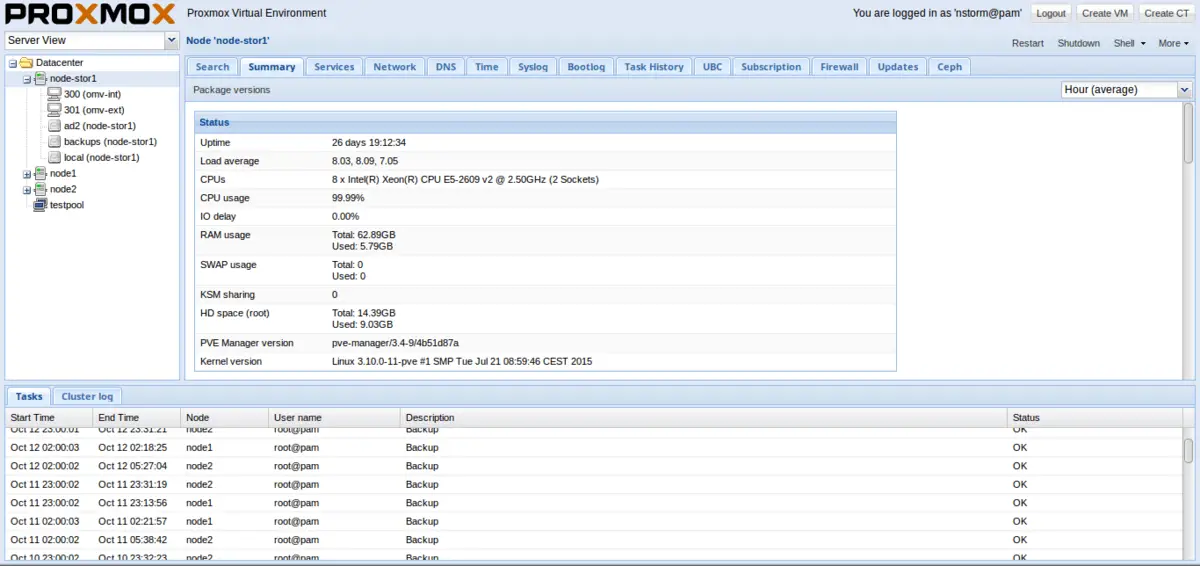

Virtualization platforms such as VMware vSphere and Linux KVM now support virtual machines at large scale. They can even host "monster" virtual machines that run as database servers. In this post, we'll review database backup solutions for virtualization platforms and weigh their advantages and disadvantages.